GLiNER2 for Agentic Information Extraction

The future belongs to models with architectures crafted, optimized, and deployed for focused tasks. Fine-tuned small language models are now competing directly with trillion-parameter models on specific tasks, upending scaling laws once written in stone.

GLiNER proved that purpose-built architectures can match and even outperform frontier models many times their size on zero-shot Named Entity Recognition (NER), one of the most fundamental Natural Language Processing (NLP) tasks.

In the agentic era, however, simply identifying named entities is not enough. In order to truly flourish, agents require a deep contextual understanding of their domain. This includes identifying and understanding not only the primary topics and themes in a given dataset, but the relationships between them. GLiNER2 addresses this by expanding GLiNER’s capabilities beyond NER to include text classification, structured data extraction, and entity relation extraction.

And while GLiNER2’s zero-shot performance on these downstream tasks is already competitive with frontier models like GPT-5, its most compelling feature is its fine-tunability. Fine-tuned GLiNER2 models can match or outperform fully supervised, domain-specific state-of-the-art systems many times their size, demonstrating that performance need not be sacrificed for efficiency.

In this post, we will examine GLiNER2, highlighting how its multi-task capabilities, compact, schema-driven design, and adaptability make it the logical choice for modern information extraction in the era of AI agents.

Agentic workflows require context and information extraction

GLiNER’s release in late 2023 marked a paradigm shift for modern Named Entity Recognition—reframing zero-shot entity extraction as a quick and efficient matching task as opposed to a slow and costly generative task.

However, much has changed since 2023. The emergence of AI agents has underscored the importance of context, creating a new paradigm in AI engineering: context engineering. This means that simply extracting entities from unstructured text is no longer enough.

Agentic capabilities like planning, tool selection, and self-reflection require models that understand entity ecosystems: who did what, to whom, when, and how. They also depend on fast, efficient routing of tasks and queries to the models best suited to perform them.

GLiNER2 offers two critical architectural advantages for agentic systems. First, it extracts rich and meaningful context from unstructured data in the form of entities, relations, and classes, offering agents critical situational awareness. Second, its size and adaptability means that it can be fine-tuned to specialize in any number of tasks and be deployed with ease. Using small, specialized models in AI agents cuts latency and ultimately frees up larger models to focus on what they do well: reasoning, thinking, and planning.

GLiNER2 extracts information and context from unstructured data

GLiNER2 builds on the success of GLiNER by expanding its capabilities to include relation extraction, structured data extraction, and text classification.

Here’s a closer look at GLiNER2’s capabilities and how they complement agentic systems:

NER. Named Entity Recognition identifies and categorizes key entities such as names, locations, and organizations within unstructured text. It enables agentic systems to resolve references and accurately identify the "who, what, and where" required to execute specific tool calls.

Relation extraction. Relation extraction identifies the semantic connections and dependencies between identified entities within a sentence. It provides agentic systems with the logic needed to build dynamic knowledge graphs, allowing an agent to reason about how one data point (e.g., a "Product") impacts another (e.g., a "Supplier") without human intervention.

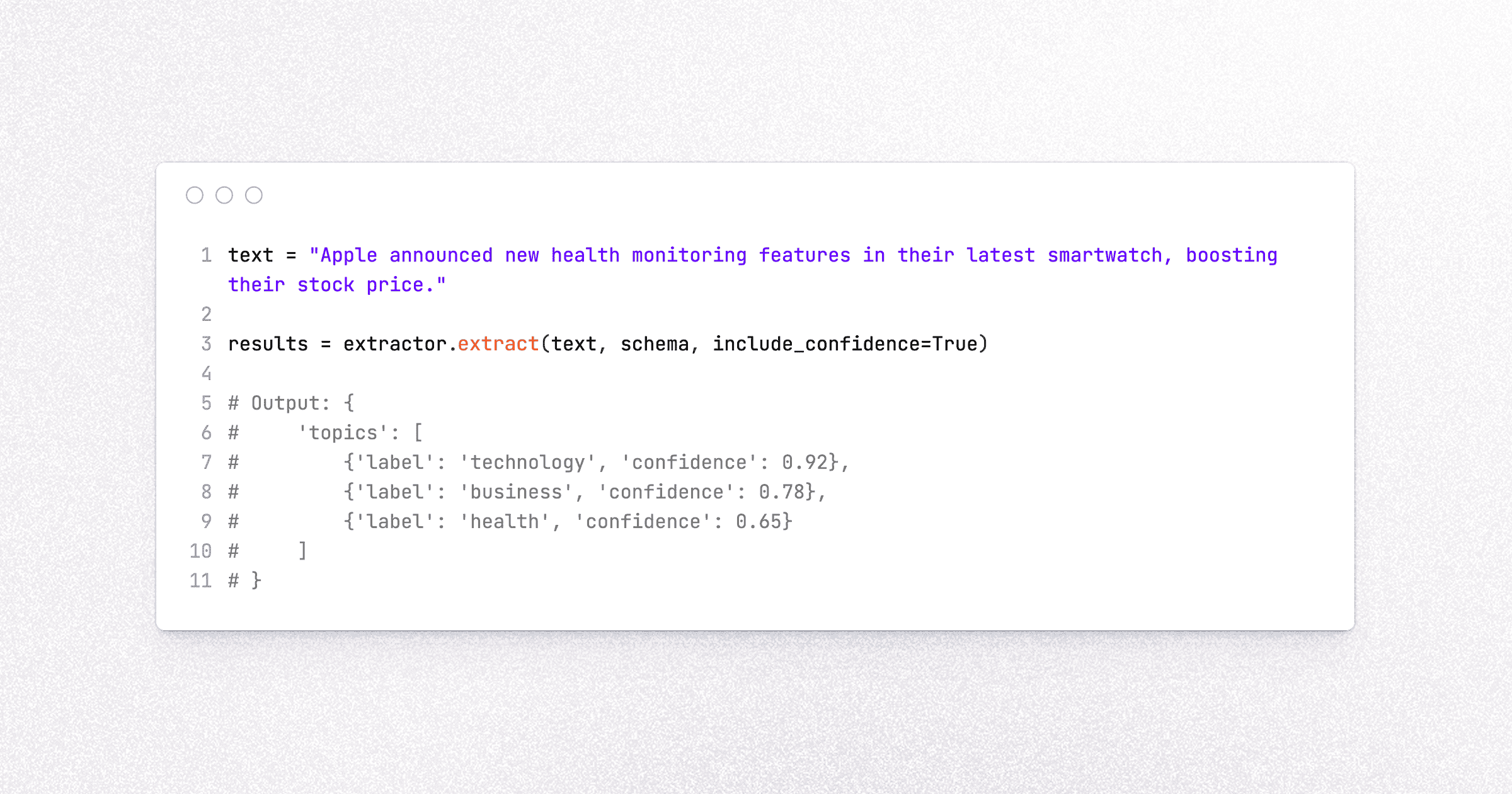

Structured data (JSON) extraction. Structured data extraction transforms raw text into predefined formats like JSON by mapping spans to a specific schema. It allows agentic systems to reliably pass clean, validated data to downstream APIs and database schemas without formatting errors.

Text classification. Text classification assigns predefined categories or labels to entire segments of text based on their content. It serves as a high-speed router for agentic systems, allowing them to determine intent and select the most appropriate workflow or sub-agent for a given task.

GLiNER2 achieves the highest average accuracy in zero-shot text classification among open-source baselines and closely matches GPT-5 in overall NER performance on the CrossNER benchmark, despite being a general-purpose model running entirely on CPU at a fraction of the cost and latency. The reason this model can hold its own against models many times its size trained on enormous text corpora, is that it employs a bidirectional encoder transformer architecture. This means two things:

Bidirectional models use the text before and after a given token to capture rich, holistic contextual representations for every part of the input simultaneously.

Encoder models excel at understanding language, requiring significantly fewer parameters than generative models. Because they aren't burdened by sequential token prediction, they can dedicate their entire parameter budget to discriminative matching and span identification, resulting in higher efficiency and superior extraction performance.

GLiNER2’s unique unification of these four fundamental NLP tasks makes it an excellent choice for adding structure and extracting information and context from unstructured text.

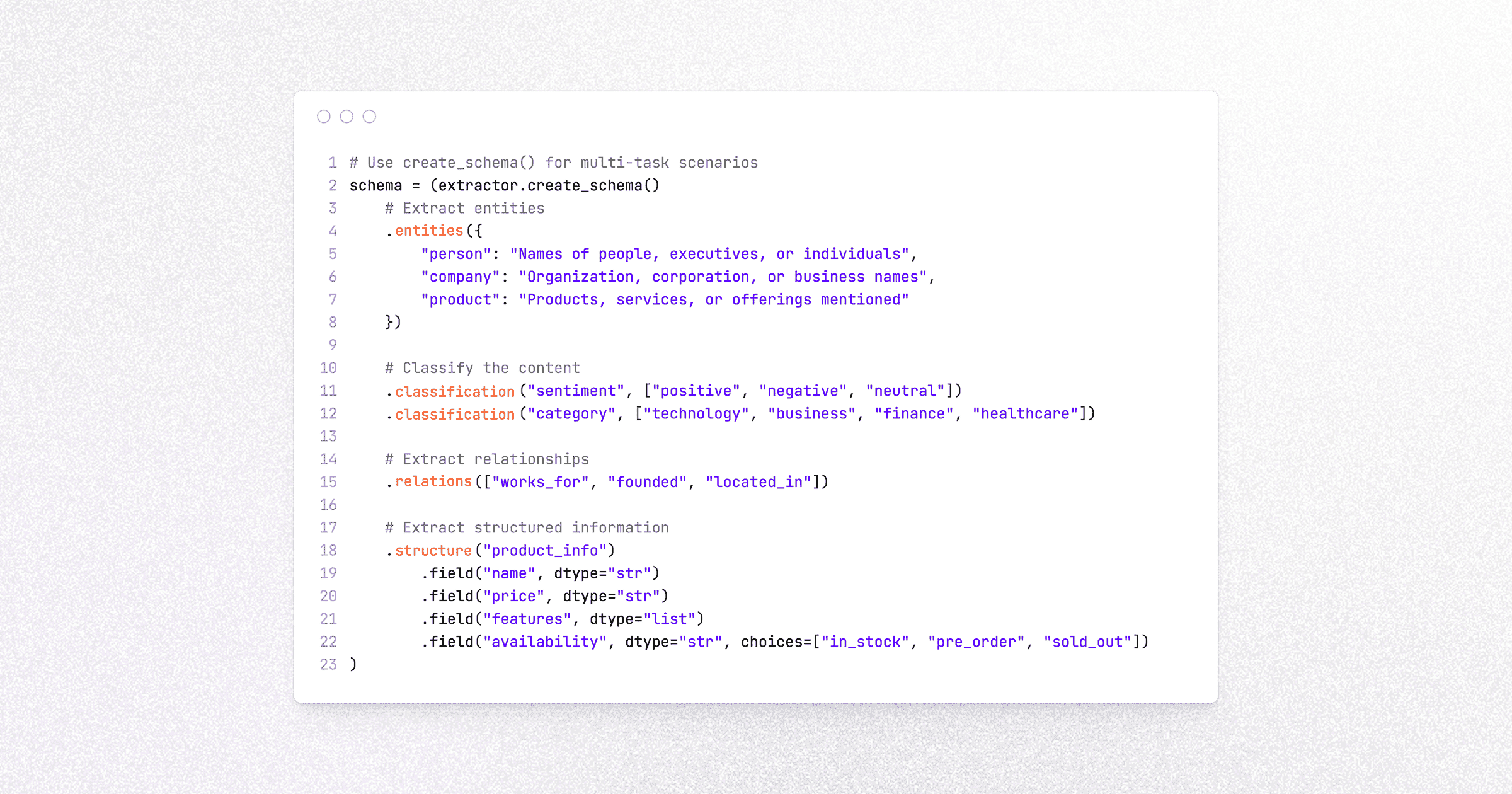

Four tasks in a single forward pass

Like the original GLiNER model, GLiNER2 processes input texts and target labels bidirectionally, projecting them into a shared latent space. However, in a departure from the original GLiNER architecture, GLiNER2 leverages a declarative schema-driven interface that enables simultaneous entity, relation, and structured data extraction, and text classification.

At only 205M parameters, GLiNER2 can perform four tasks in a single forward pass on a CPU. Its bidirectional encoder architecture and use of a schema-driven interface gives it several distinct advantages compared to LLMs, including:

Speed and efficiency. Generative LLMs are autoregressive, meaning that they predict the next token one at a time, creating a sequential bottleneck. GLiNER2 processes the entire input at once and extracts multiple fields in a single parallelizable step.

Deterministic accuracy. Encoder models are not generative, meaning they do not produce hallucinations. Instead, each output is a direct mapping, or score, of the input text against your schema in a shared latent space.

Reliable structured outputs. Using encoder models also means no fighting with the model to produce structured outputs and adhere to a fixed schema. The output format for all GLiNER2 tasks is predefined.

By consolidating disparate extraction tasks into a single forward pass, GLiNER2 goes beyond simple data extraction to capture the underlying meaning and relationships within unstructured text. This creates the high-fidelity grounding necessary for agentic applications to maintain precise context and navigate complex workflows without the overhead, cost, and latency of large generative models.

Fine-tuned GLiNER2 allows for rapid, private, and precise specialization

GLiNER2 maintains impressive zero-shot capabilities across several tasks, compared to both open-source encoder models and GPT-5. It achieves the highest average accuracy in zero-shot text classification among open-source baselines and rivals GPT-5 in NER capabilities across several domains.

Despite its impressive performance and ability to perform four tasks in a single forward pass, GLiNER2’s defining feature is its fine-tunability. At only 205 million parameters, GLiNER2 is incredibly small. Fine-tuning small language models is significantly faster and simpler than fine-tuning large language models, requiring less data, technical overhead, and compute.

GLiNER2 models can be fine-tuned in as little as three minutes on as few as ten additional task-specific examples, to achieve significantly better results compared to zero-shot performance. This essentially transforms fine-tuning from a time- and data-intensive task requiring complex pipelines to a simple, trivial step in the agent deployment pipeline. And because it can be fine-tuned locally, it is well-suited for workflows involving highly sensitive or private data. There are several tasks for which fine-tuned GLiNER2 models are especially useful, including:

Prompt hack detection. A fine-tuned GLiNER2 model can identify adversarial inputs and injection attempts before they reach the core model.

Hallucination detection. GLiNER2 can detect hallucinations by extracting factual claims from a model's response and comparing them against a provided reference text to flag entities or relations that have no supporting evidence.

Guardrails. GLiNER2 can enforce content and behavioral boundaries, flagging outputs or inputs that fall outside defined safe parameters, like toxic language.

Model routing. By classifying the intent and complexity of incoming requests, GLiNER2 can direct tasks to the most appropriate model in a heterogeneous stack. Queries requiring complex reasoning can be routed to larger models and simpler queries can be routed to smaller models.

Summary

GLiNER2 is a small language model built for the agentic era. A CPU-first model that extracts context, meaning, and structure from unstructured text, GLiNER2 is the logical choice for agentic workflows that demand small, precise, and low-latency models. And because GLiNER2 uses a bidirectional encoder architecture and is easily fine-tunable, it can compete directly with models many times its size for specific, well-defined tasks, offering a smaller, cheaper, faster alternative without sacrificing performance. In a field still captivated by scale, GLiNER2 is a reminder that the best model for the job is isn’t always the largest one. It is the most specialized.

Want to fine-tune a GLiNER2 model in less than five minutes? Sign up for our waitlist here.

Additional resources

Sources cited

Zaratiana, U., Pasternak, G., Boyd, O., Hurn-Maloney, G., & Lewis, A. (2025). GLiNER2: An Efficient Multi-Task Information Extraction System with Schema-Driven Interface (arXiv:2507.18546). arXiv. https://arxiv.org/abs/2507.18546

Zaratiana, U., Tomeh, N., Holat, P., & Charnois, T. (2023). GLiNER: Generalist Model for Named Entity Recognition using Bidirectional Transformer (arXiv:2311.08526). arXiv. https://arxiv.org/abs/2311.08526